For over a decade, “Big Data” has been the buzzword that drove enterprises to adopt complex, expensive infrastructures designed to handle seemingly massive data volumes.

However, as recent research and industry insights reveal, the actual data sizes processed by most enterprises are far smaller than the Big Data narrative would have you believe. This has led to a growing realization that the traditional Big Data approach may be overkill for most organizations, resulting in inefficiencies and wasted resources.

Sources: [MotherDuck], [McKinsey]

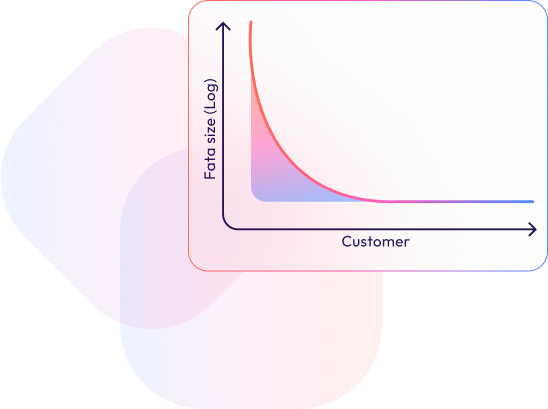

Large enterprises typically process data sizes ranging from 10 to 100 GB.

The median data storage size is often below 100 GB.

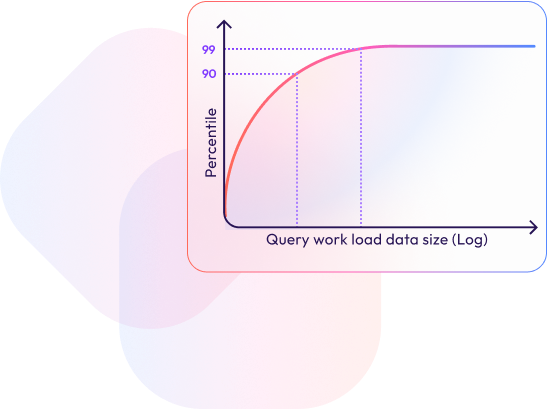

Analytical queries usually involve data sizes under 100 MB per query.

By 2025, data assets are organized and supported as products.

Despite the hype, the average data size processed by large enterprises typically ranges from just 10 to 100 GB. Even companies that handle substantial amounts of data rarely exceed a few terabytes of active, operational data. As Jordan Tigani points out, most businesses do not generate the multi-petabyte datasets that would justify extensive Big Data infrastructure such as Apache Spark or Snowflake.

“There were many thousands of customers who paid less than $10 a month for storage, which is half a terabyte. Among customers who were using the service heavily, the median data storage size was much less than 100 GB.”
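As a quick sanity check on the figures in the quote, $10 a month for half a terabyte works out to roughly two cents per GB per month. The sketch below reproduces that arithmetic. It assumes decimal units (1 TB = 1000 GB), and the function names are illustrative rather than part of any billing API. The 100 GB figure is used only as an upper bound, since the quote says the median was "much less than 100 GB".

```python
# Figures from the quote above. Decimal units (1 TB = 1000 GB) are an assumption.
MONTHLY_BILL_USD = 10.0
STORAGE_TB = 0.5
GB_PER_TB = 1000


def price_per_gb_month(bill_usd: float, storage_tb: float) -> float:
    """Implied storage price in USD per GB per month."""
    return bill_usd / (storage_tb * GB_PER_TB)


def monthly_storage_cost(size_gb: float, rate_usd_per_gb: float) -> float:
    """Monthly storage cost in USD for a dataset of size_gb at the given rate."""
    return size_gb * rate_usd_per_gb


rate = price_per_gb_month(MONTHLY_BILL_USD, STORAGE_TB)
# 100 GB is an upper bound on the median quoted above, not a measured value.
upper_bound_cost = monthly_storage_cost(100, rate)

print(f"Implied rate: {rate:.3f} USD per GB-month")
print(f"Storage cost at 100 GB: {upper_bound_cost:.2f} USD per month")
```

At that implied rate, the storage bill for a median heavy user would be a couple of dollars a month at most, which puts the scale of these workloads in perspective.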

Many enterprises that adopt Big Data tools find themselves underutilizing these platforms. Data warehouses in several companies are significantly smaller than the marketed capacity of these tools, often managing less than a terabyte of data. Moreover, most analytical queries executed on these platforms touch far less data than that, frequently under 100 MB per query. As a result, businesses pay for capabilities they rarely, if ever, need.

“90% of queries processed less than 100 MB of data.”
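A team can run the same kind of check against its own query history. The sketch below is a minimal illustration, assuming per-query scan sizes in bytes have already been exported from the warehouse's query log. The sample values are made up for demonstration, not real measurements, and `share_under` is a hypothetical helper name.

```python
# Hypothetical per-query scan sizes in bytes (made-up sample, not real measurements).
MB = 1_000_000
scanned_bytes = [
    2 * MB, 15 * MB, 40 * MB, 75 * MB, 90 * MB,
    8 * MB, 60 * MB, 30 * MB, 99 * MB, 5_000 * MB,
]


def share_under(sizes: list[int], threshold_bytes: int) -> float:
    """Fraction of queries that scanned strictly less than threshold_bytes."""
    if not sizes:
        return 0.0
    small = sum(1 for size in sizes if size < threshold_bytes)
    return small / len(sizes)


share = share_under(scanned_bytes, 100 * MB)
print(f"{share:.0%} of queries scanned less than 100 MB")
```

If the share for a real workload comes out anywhere near the figure quoted above, that is a strong hint the workload fits comfortably on a single machine.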

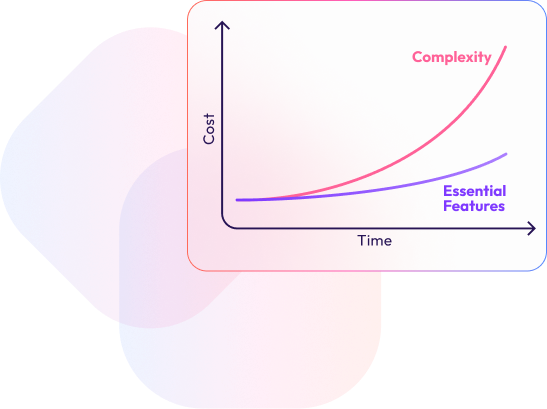

Building and maintaining Big Data infrastructures comes with high costs, both in financial investment and in operational complexity. Large-scale data processing platforms require significant resources, including specialized staff, to manage and maintain the infrastructure. Yet the benefits of these investments often do not align with the actual data needs of the organization, leading to inefficiencies and wasted resources.

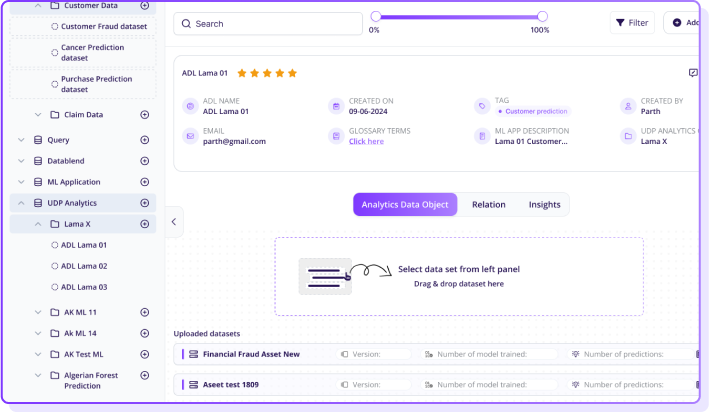

xAQUA Analytics Data Lake offers a transformative solution by leveraging the power of modern, intelligent technologies like Generative AI, Active Metadata, Apache Arrow, DuckDB, and SQL to streamline data integration, transformation, and analytics at scale.

Drive Decision Intelligence from Siloed Data in Real-Time with xAQUA On-Memory Analytics Data Lake – Say Goodbye to Data Warehousing and Complex Clustered Infrastructure!

Experience the Power and Simplicity of Integrating, Analyzing, Transforming, and Delivering Data Products and Insights from Diverse Data Sources without a Data Warehouse.

Welcome to xAQUA Analytics Data Lake (ADL), a revolutionary platform designed to eliminate the complexities of traditional data processing. Whether your data resides in the cloud, on-premises, within SaaS applications, or in cloud object stores, xAQUA ADL seamlessly brings it all together into a unified, in-memory data lake.

Without writing code, powered by AI co-pilots!

In conclusion, the narrative around Big Data is being redefined. While the myth of needing vast infrastructure for massive datasets persists, the reality is that most enterprises can achieve their data goals with far more streamlined, efficient solutions. xAQUA Analytics Data Lake provides this solution, aligning with real-world data sizes and delivering value where traditional Big Data tools often fall short.